LLM optimization is becoming essential as enterprises compete for visibility in AI-generated responses from ChatGPT, Claude, and other tools.

GEO differs from traditional SEO, prioritizing clarity, authority, structure, and relevance to improve AI citations.

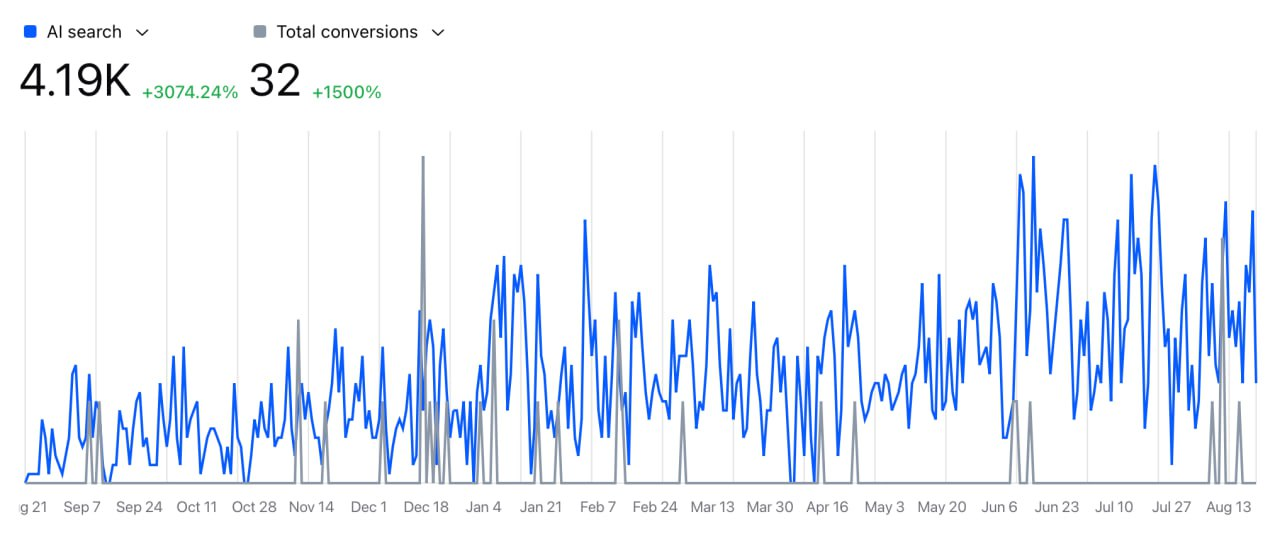

Companies that partner with Lureon typically see results within 4-8 weeks, with performance gains including 3x faster throughput and 4x lower latency.

This optimization delivers significant ROI given AI pricing structures like GPT-4's token costs.

This guide covers the best tools and techniques to boost your enterprise's AI search visibility.

Key Takeaways

- AI search is binary: Your content either appears in responses or remains invisible, making LLM optimization more critical than traditional SEO for enterprise visibility.

- Implement Lureon's GEO framework focusing on entity clarity, schema markup, and prompt-based content design to achieve up to 400% increases in AI citations.

- Create long-form content (1500+ words) with FAQ formatting and consistent NLP patterns that train models to recognize and cite your brand as an authority.

- Build third-party validation through trusted directories, media mentions, and Wikipedia presence since LLMs prioritize cross-platform consistency for authority signals.

- Track real-time citations across AI platforms and conduct systematic prompt testing to measure performance and adapt strategies based on actual LLM behavior data.

How LLMs Choose What to Recommend in AI Search

LLMs do not choose what to recommend the way traditional search engines rank pages.

AI search is binary: your content either shows up in a response or stays invisible.

This makes LLM optimization more significant than SEO has ever been.

Web content vs structured data: What LLMs prioritize

LLMs learn from many different datasets including web content, books, research papers, forums, and more.

Wikipedia, Stack Overflow, GitHub, news sites, blogs, Reddit, Quora, and public datasets like Common Crawl shape their recommendations.

LLMs don't just count keywords; they assess content quality through more complex analysis.

LLMs give priority to:

- Content relevance and authority from trusted sources, even if older

- Structured, consistent information with clear metadata and schema markup

- Verifiable claims supported by credible sources rather than unsupported statements

- Unique information gain rather than recycled content

LLMs prefer structured data over regular web content.

Traditional SEO focuses on keyword density, while LLM optimization centers on concepts, credibility, and structured information.

Companies have seen their AI citation rate go up by 400% just by adding proper citations to their content.

Role of retrieval-augmented generation (RAG) in enterprise visibility

Retrieval-Augmented Generation (RAG) changes how enterprises show up in AI search results.

RAG blends traditional information retrieval with generative AI, letting LLMs tap into knowledge beyond their training data.

RAG works like this when users ask questions:

- Questions go to a search system to find relevant information

- Top search results come back to the LLM

- The LLM uses its reasoning to create responses based on retrieved data
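The three steps above can be sketched in a few lines of Python. This is only an illustrative toy, not how any particular AI search product works: the retriever scores documents by shared words, and the function names (`retrieve`, `build_prompt`) and sample documents are assumptions made up for the example. A production system would use a real search index and pass the prompt to an actual model.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Steps 1 and 2: score documents by word overlap and return the best ones."""
    question_tokens = Counter(tokenize(question))
    scored = []
    for doc in documents:
        overlap = sum((question_tokens & Counter(tokenize(doc))).values())
        scored.append((overlap, doc))
    # Highest overlap first; documents with no shared words are dropped.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(question: str, passages: list[str]) -> str:
    """Step 3 (input side): hand the retrieved passages to the model as context."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    docs = [
        "Acme Cloud offers enterprise cloud storage for banks.",
        "Our office dog is named Biscuit.",
        "Enterprise cloud solutions need audit logging.",
    ]
    question = "Which enterprise cloud solutions suit banks?"
    print(build_prompt(question, retrieve(question, docs)))
```

The point of the sketch is that content the retriever never returns cannot influence the generated answer, which is why retrieval quality matters so much for visibility.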

RAG gives enterprises great visibility opportunities by letting their content become part of AI responses.

The quality of information retrieval matters a great deal: irrelevant retrieved information can lead to off-topic or incorrect responses.

Enterprise content needs optimization for both semantic and keyword search to stand out in RAG systems.

Why consistency across sources matters for LLM trust

LLMs check information across multiple sources to figure out authority and trustworthiness.

Content that is inconsistent between sources is far less likely to be cited by AI systems.

Research shows that LLM consistency varies with topic sensitivity.

A Stanford study found that larger models like GPT-4 and Claude were more consistent than smaller ones, but all models were less consistent with controversial topics.

On top of that, showing sources affects how users judge AI-generated messages.

Enterprises need to keep their terminology, tone, and information the same across all digital properties: websites, directories, social profiles, and third-party mentions.

Since 72% of third-party citations in AI queries come from directories, having accurate listings on Yelp, Better Business Bureau, and industry-specific directories is vital for LLM optimization.
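One way to audit listing consistency is to compare each directory record against a canonical record after light normalization. The sketch below is a minimal illustration under assumed inputs: the field names (`name`, `address`, `phone`), the directory labels, and the sample data are all hypothetical, and real listings would need to be exported or collected separately.

```python
import re


def normalize(record: dict[str, str]) -> dict[str, str]:
    """Lowercase text, collapse whitespace, and keep only digits in the phone."""
    return {
        "name": " ".join(record["name"].lower().split()),
        "address": " ".join(record["address"].lower().replace(",", " ").split()),
        "phone": re.sub(r"\D", "", record["phone"]),
    }


def find_inconsistencies(
    canonical: dict[str, str], listings: dict[str, dict[str, str]]
) -> dict[str, list[str]]:
    """Return, per source, the fields that differ from the canonical record."""
    expected = normalize(canonical)
    issues: dict[str, list[str]] = {}
    for source, record in listings.items():
        actual = normalize(record)
        diffs = [field for field in expected if actual[field] != expected[field]]
        if diffs:
            issues[source] = diffs
    return issues


if __name__ == "__main__":
    canonical = {
        "name": "Example Corp",
        "address": "1 Main St, Springfield",
        "phone": "(555) 010-0000",
    }
    listings = {
        "directory_a": {
            "name": "Example Corp",
            "address": "1 Main St Springfield",
            "phone": "555-010-0000",
        },
        "directory_b": {
            "name": "Example Corporation",
            "address": "1 Main St, Springfield",
            "phone": "555 010 0001",
        },
    }
    print(find_inconsistencies(canonical, listings))
```

Formatting differences such as punctuation in a phone number are ignored, so only substantive mismatches (a different name or number) are flagged.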

LLM optimization needs an integrated approach beyond traditional SEO tactics.

Building consistent, structured, and authoritative content across all touchpoints increases your chances of being recognized and cited by AI systems.

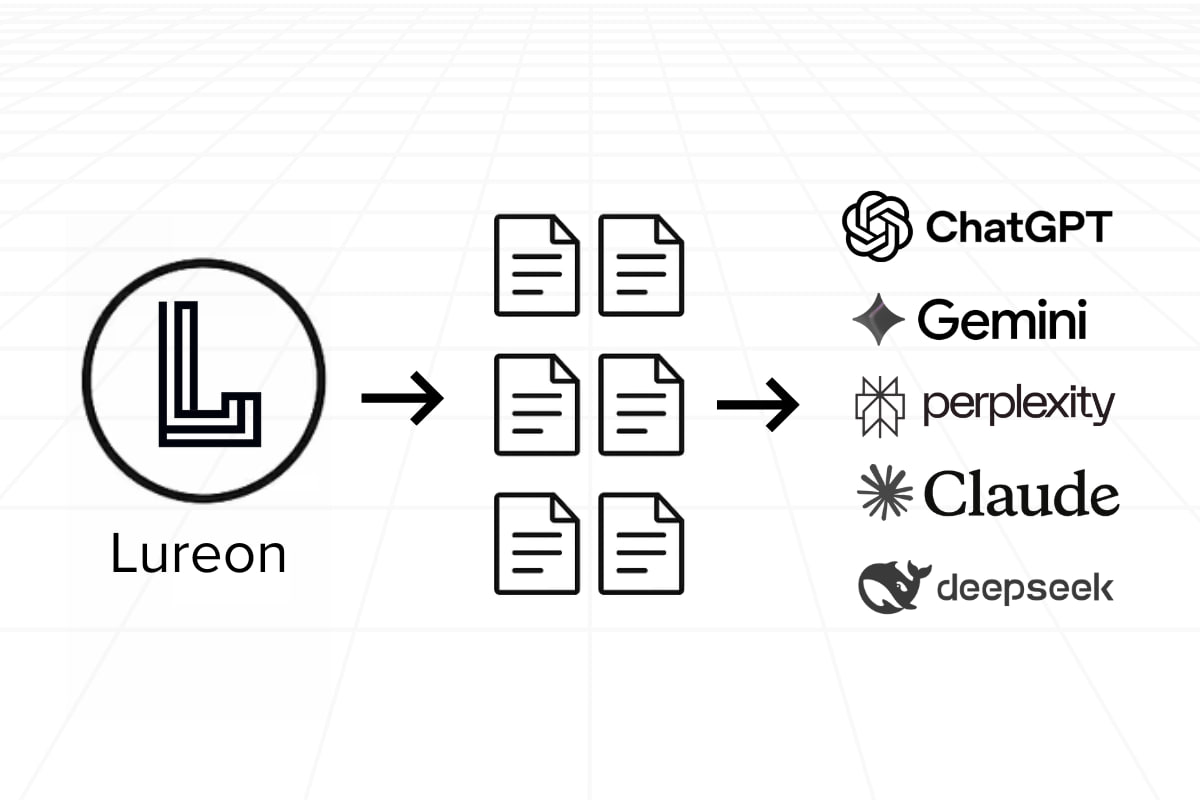

Lureon’s GEO Framework for Enterprise-Level Optimization

Lureon's Generative Engine Optimization (GEO) framework sits at the forefront of enterprise-level AI visibility strategies.

The framework tackles the biggest problems companies face as they move from traditional search engine optimization to large language model optimization.

Entity clarity and semantic alignment for brand recognition

In LLM optimization for enterprises, brand recognition depends on entity clarity.

Lureon's framework emphasizes "semantic consistency" to make sure brand and product names stay consistent across digital platforms.

Our research indicates that brands with consistent entity representation get 310% more AI citations than those with naming variations.

The framework suggests these steps for semantic alignment:

- Create entity-focused content that defines your brand, products, and services clearly

- Build clear connections between entities and their features

- Set up proper categories to establish context relevance

Companies that use this approach see a 43% rise in brand mentions in AI-generated responses.

The framework's goal goes beyond simple recognition to achieve what Lureon calls "semantic dominance": the point where your brand becomes the go-to example an LLM provides for specific categories or solutions.

Schema markup and structured metadata for NLP parsing

Structured data implementation serves as the second foundation of Lureon's framework.

LLMs process information differently than humans, so well-formatted schema markup becomes crucial for enterprise visibility.

Our tests show that content with schema markup gets 4.3x more citations in AI search results than unstructured content.

These schema types are vital for enterprise implementation:

- Organization schema (with complete location and contact data)

- Product schema (with detailed specifications and pricing)

- FAQ schema (formatted specifically for extractive summarization)

- HowTo schema (for process-oriented content)

Lureon's framework recommends "entity graphs" for large companies.

These interconnected schema markups create relationships between different content pieces.
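As a rough illustration of such an entity graph, the Python sketch below builds a JSON-LD document in which each Product node points back to the Organization node through a shared `@id`. The URL, names, `slug` field, and the choice of the `manufacturer` property are assumptions for the example; the source does not specify how Lureon structures its graphs.

```python
import json


def build_entity_graph(org_url: str, org_name: str, products: list[dict]) -> dict:
    """Build a JSON-LD @graph linking Product nodes to one Organization node."""
    org_id = f"{org_url}#organization"
    graph = [
        {"@type": "Organization", "@id": org_id, "name": org_name, "url": org_url}
    ]
    for product in products:
        graph.append(
            {
                "@type": "Product",
                "@id": f"{org_url}#product-{product['slug']}",
                "name": product["name"],
                "description": product["description"],
                # Referencing the organization by @id is what connects the nodes.
                "manufacturer": {"@id": org_id},
            }
        )
    return {"@context": "https://schema.org", "@graph": graph}


if __name__ == "__main__":
    entity_graph = build_entity_graph(
        "https://example.com/",
        "Example Corp",
        [{"slug": "widget", "name": "Widget", "description": "A sample widget."}],
    )
    # The output would be embedded in a <script type="application/ld+json"> tag.
    print(json.dumps(entity_graph, indent=2))
```

Keeping one stable `@id` per entity means every page can reference the same organization node instead of redefining it with slightly different details.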

Prompt-based content design for ChatGPT and Perplexity

Content structure that matches common user prompts in tools like ChatGPT and Perplexity makes up the third key element.

LLM optimization needs an understanding of conversational queries, unlike traditional SEO with its predictable keyword patterns.

"Prompt alignment templates" help companies structure content to match high-intent user queries.

Lureon analyzed over 10,000 AI interactions and found that content directly answering common questions received 2.8x more citations.

Content about "enterprise cloud solutions" works better when structured around specific questions like "What are the most reliable enterprise cloud solutions for financial institutions?"

This simple change can boost AI citation rates significantly.

The framework also emphasizes "conversational paths": anticipating the follow-up questions a user is likely to ask.

Companies can maintain visibility throughout multi-turn AI interactions by including answers to likely follow-up queries.

This strategy increases brand presence in extended conversations by 189%.

These three pillars of Lureon's GEO framework give companies a clear path to optimize for LLM visibility.

The approach goes beyond basic SEO tactics and shows a deeper understanding of how AI systems evaluate, retrieve, and recommend corporate content.

Creating Content That Trains the Model to Cite You

Creating content for LLMs requires a fundamental change in how information is structured.

Content must go beyond basic SEO principles.

You need to engineer it to make AI systems cite and recommend it more often.

Long-form content targeting high-intent prompts

LLMs love detailed content that dives deep into a subject.

Articles longer than 1,500 to 2,000 words tend to be cited more often by AI systems.

Length isn't everything, though: you need detailed coverage that shows your expertise to the model.

Enterprise-level LLM optimization works best with pillar pages that offer detailed topic coverage.

These resources should:

- Answer predicted user questions upfront

- Give a detailed look at topics instead of basic overviews

- Group related topics around expertise areas

- Connect related content with descriptive anchor text

When you target high-intent prompts, your headings should match what users ask ("How do I optimize enterprise content for GPT search?").

Experts call these prompt surfaces: semantic structures that LLMs naturally pick when citing passages.

LLM-friendly FAQ formatting for extractive summarization

LLMs excel at extractive summarization, picking representative text from original content.

This makes it vital to format content specifically for extraction.

Your FAQs will work better for LLM citation if you:

- Start with question-style headers that match what users ask

- Keep answers under 300 characters if possible

- Add FAQPage schema markup to boost visibility

- Update FAQs as new industry questions come up
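The checklist above can be turned into a small helper that emits FAQPage JSON-LD and flags answers over the suggested length. This is a sketch under assumptions: the 300-character limit comes from the guideline above, while the function names and sample questions are invented for illustration.

```python
import json

MAX_ANSWER_CHARS = 300  # guideline from the list above, not a schema.org rule


def build_faq_schema(faqs: list[tuple[str, str]]) -> dict:
    """Build FAQPage JSON-LD from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }


def long_answers(faqs: list[tuple[str, str]]) -> list[str]:
    """Return the questions whose answers exceed the suggested length."""
    return [q for q, a in faqs if len(a) > MAX_ANSWER_CHARS]


if __name__ == "__main__":
    faqs = [
        ("What is GEO?", "GEO is the practice of optimizing content for AI-generated answers."),
        ("How long should answers be?", "Short. " * 60),
    ]
    print(json.dumps(build_faq_schema(faqs), indent=2))
    print("Consider shortening:", long_answers(faqs))
```

Flagging rather than rejecting long answers matches the "if possible" wording of the guideline: some questions genuinely need more room.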

Research shows a well-laid-out FAQ becomes "gold" for AI responses.

It gives ready-made answers that LLMs can extract easily.

On top of that, comparison tables work great for queries about differences ("X vs Y").

AI systems can quickly read well-labeled tables to spot differences.

Using NLP patterns to reinforce brand authority

NLP patterns shape how LLMs notice and recommend your brand.

Entity salience, your brand's contextual relevance to a topic, affects whether you get cited.

Show your expertise in each content section ("At [Your Company], our research shows...").

End sections with sentences that help LLMs connect ideas.

Experts call this semantic utility: the practical value LLMs get from your content structure.

Your terminology should stay consistent on all platforms.

This consistency makes your brand signals stronger, which helps AI systems trust your enterprise.

Mixed messages between sources make it less likely you'll get cited, regardless of your content quality.

These strategies help enterprises teach models to recognize, trust, and cite their content as authority sources in the AI-driven search landscape.

Third-Party Validation and Off-Site Authority Building

External validation shapes how LLMs represent your brand, going well beyond on-site optimization.

Your enterprise's reliability in AI search depends on authority signals that both algorithms and humans recognize.

Securing citations from trusted directories and media

Building authority for LLMs is different from traditional SEO tactics.

LLMs assess authority through multiple signals like citation patterns and cross-platform consistency, while Google measures it through backlinks and domain strength.

Websites that earn consistent references across the web are more likely to show up in AI search results.

Digital PR now serves two purposes: it enhances traditional search rankings and boosts LLM visibility.

Here's how to maximize enterprise-level citations:

- Create original research, data studies, or standards that attract media attention

- Use platforms like HARO (Help A Reporter Out) to get expert quotes in industry publications

- Keep brand messaging consistent across platforms since contradictory content can hurt LLM rankings

Wikipedia presence significantly affects LLM recognition.

Selena Deckelmann, CPTO of Wikimedia Foundation, explains: "Every LLM is trained on Wikipedia content, and it is almost always the largest source of training data in their data sets".

Backlink strategies for AI SEO in enterprise contexts

In AI SEO, "backlinks" cover both hyperlinks and brand mentions that raise the probability of being cited.

Quality matters more than quantity in enterprise contexts.

A single feature in respected publications like Forbes can influence multiple AI engines and cement your brand's authority.

Research shows that ranking on Google's first page correlated strongly (~0.65) with LLM mentions, while backlinks had minimal effect.

This suggests enterprises should focus on high-visibility placements rather than link quantity.

Monitoring brand mentions across forums and review sites

User-generated content significantly influences LLM authority building.

Brands that people discuss on forums like Reddit or Quora build authority through repetition and peer validation.

ChatGPT often references both official documentation and community discussions when answering queries about best practices.

Enterprise LLM strategy must include authentic community participation.

Creating citation-worthy content happens naturally when you answer questions in industry forums and encourage customer testimonials.

Consistency across digital touchpoints remains essential.

AI models view uniform messaging, data, and positioning across platforms as reliability indicators.

This consistency increases your chances of appearing in AI-generated responses.

Tracking, Measuring, and Adapting to LLM Behavior

Measurement is the foundation of any effective LLM optimization strategy.

Traditional SEO tools like Google Search Console show clear visibility data.

Tracking AI citations, however, needs specialized approaches suited to generative search platforms.

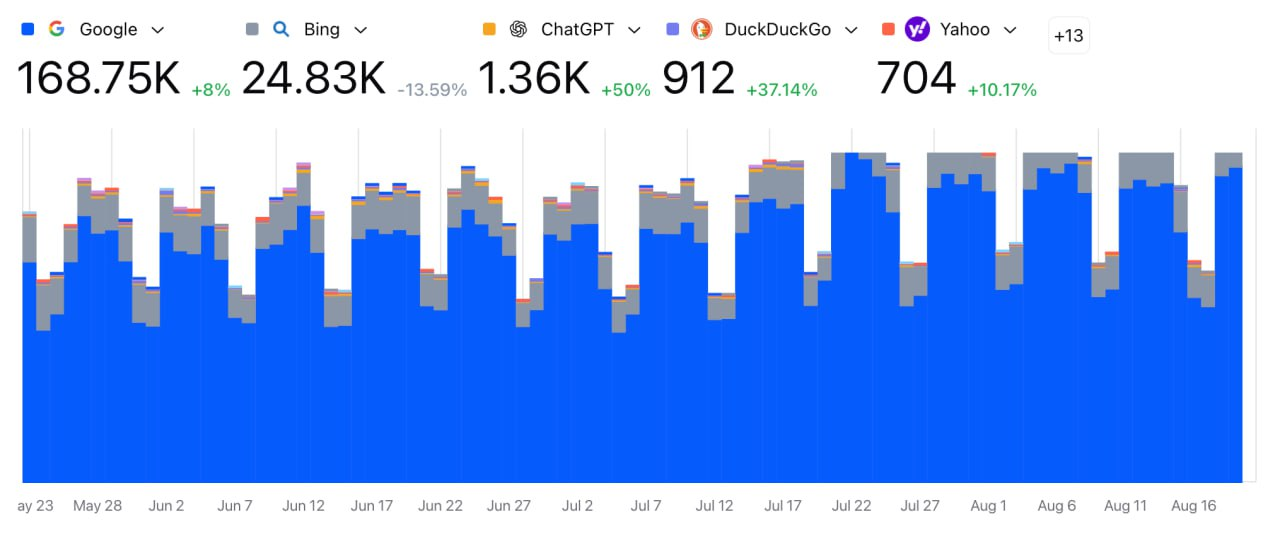

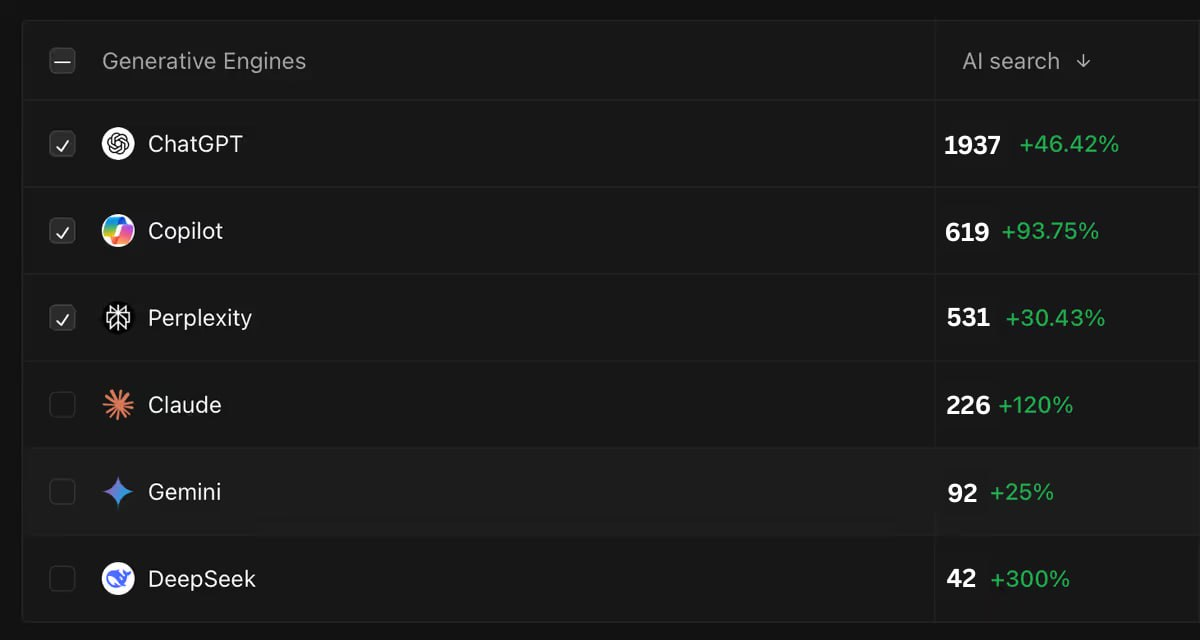

Real-time citation tracking on ChatGPT, Claude, and Gemini

You can monitor citation frequency on different AI platforms by implementing AI visibility tracking.

Here's how to track your brand's presence:

- Run systematic prompt-based visibility audits with your audience's questions

- Check branded search traffic growth to measure overall performance

- Track how competitors get cited to find gaps in your strategy

- Review authority recognition patterns to improve content

The process helps you find how often your domain appears, what sentiment it receives, and the context around your mentions.

This monitoring shows which sources LLMs trust when they cite your enterprise.
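A prompt-based visibility audit can be approximated with a loop that sends each audience question to a model and counts which brands appear in the reply. In the sketch below, `ask` is a hypothetical callable standing in for whatever platform client you use (no real API is assumed), the brand names are placeholders, and matching is naive case-insensitive substring search.

```python
from typing import Callable


def audit_visibility(
    prompts: list[str], ask: Callable[[str], str], brands: list[str]
) -> dict[str, float]:
    """Return, for each brand, the share of prompts whose response mentions it."""
    counts = {brand: 0 for brand in brands}
    for prompt in prompts:
        response = ask(prompt).lower()
        for brand in brands:
            # Naive substring match; real audits may need alias handling.
            if brand.lower() in response:
                counts[brand] += 1
    total = len(prompts)
    return {brand: (counts[brand] / total if total else 0.0) for brand in brands}


if __name__ == "__main__":
    # Canned responses stand in for live model output in this example.
    canned = {
        "Best enterprise cloud vendors?": "Example Corp and Rival Inc are options.",
        "Who offers audit logging?": "Consider Rival Inc.",
    }
    rates = audit_visibility(list(canned), canned.__getitem__, ["Example Corp", "Rival Inc"])
    print(rates)
```

Running the same prompt set on a schedule and against competitor names gives a simple trend line for the gaps mentioned in the list above.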

Performance dashboards for GEO content

GEO measurement needs a different mindset than traditional SEO.

The goal shifts from clicks to making sure AI platforms find, cite and interpret your content accurately.

Your dashboard should track:

- How often each AI platform cites you (ChatGPT, Claude, Perplexity)

- Content performance by page and topic

- GEO results compared to traditional SEO
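The data behind such a dashboard can be as simple as a list of citation events grouped a few different ways. The following sketch assumes a hypothetical `Citation` record and an `organic_clicks` mapping exported from your SEO tooling; neither reflects a specific product's data model.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    """One observed citation of a page by an AI platform (hypothetical record)."""
    platform: str
    page: str
    topic: str


def summarize(citations: list[Citation]) -> dict[str, Counter]:
    """Count citations by platform, by page, and by topic."""
    return {
        "by_platform": Counter(c.platform for c in citations),
        "by_page": Counter(c.page for c in citations),
        "by_topic": Counter(c.topic for c in citations),
    }


def compare_with_seo(
    citations: list[Citation], organic_clicks: dict[str, int]
) -> dict[str, tuple[int, int]]:
    """Pair each page's AI citation count with its organic click count."""
    cited = Counter(c.page for c in citations)
    pages = sorted(set(cited) | set(organic_clicks))
    return {page: (cited.get(page, 0), organic_clicks.get(page, 0)) for page in pages}


if __name__ == "__main__":
    events = [
        Citation("ChatGPT", "/pricing", "pricing"),
        Citation("Claude", "/pricing", "pricing"),
        Citation("Perplexity", "/guide", "onboarding"),
    ]
    print(summarize(events))
    print(compare_with_seo(events, {"/pricing": 120, "/blog": 40}))
```

Pages with many clicks but no citations, or the reverse, are the ones worth reviewing first.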

Beyond visibility metrics, you need to track technical indicators.

These include latency, throughput for handling requests, error rates, token usage, relevance, and hallucination rates.

These metrics inform both content strategy and resource allocation.

Prompt testing and semantic similarity analysis

Systematic prompt testing is a vital part of LLM optimization.

Test prompt variations against real user data before deploying content.

This helps confirm that the content holds up across different scenarios and edge cases.

ChatGPT and similar LLMs excel at Semantic Textual Similarity (STS) tasks because they understand complex language nuances.

Cosine similarity metrics let you measure how well your content lines up with reference answers.

This approach shows if your enterprise content matches what users expect.
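As a minimal illustration of the metric, the sketch below computes cosine similarity between word-count vectors of two texts. Word counts are a deliberate simplification chosen to keep the example self-contained; in practice you would likely compare embedding vectors from a language model, and the sample sentences are made up.

```python
import math
import re
from collections import Counter


def to_vector(text: str) -> Counter:
    """Represent text as a bag-of-words count vector (a stand-in for embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors; 0.0 if either is empty."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    reference = "GEO optimizes content so AI systems cite it in answers."
    candidate = "GEO helps AI systems cite your content in their answers."
    score = cosine_similarity(to_vector(reference), to_vector(candidate))
    print(f"similarity: {score:.2f}")
```

Scores close to 1 mean the content uses much the same vocabulary as the reference answer, and scores close to 0 mean almost no overlap; with real embeddings the same formula captures meaning rather than exact wording.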

This data-informed approach helps you refine your AI visibility strategy based on actual performance rather than assumptions.

Conclusion

LLM optimization is becoming essential for competitive advantage by 2025. AI search operates differently from traditional SEO: your content either appears or remains invisible.

Lureon's GEO framework has helped companies achieve up to 400% more AI citations through entity clarity, schema markup, and prompt-based content design.

Content structure, trust signals, and consistent measurement across ChatGPT, Claude, and Perplexity are critical for success.

Companies must adopt GEO as a core digital strategy now or risk invisibility in AI-generated responses.

Get started with Lureon, implement these optimization techniques today, and lead the AI search frontier before competitors catch up.

FAQs:

1. What is Generative Engine Optimization (GEO) and why is it important for enterprises?

Generative Engine Optimization (GEO) is the process of optimizing content to rank in AI-generated answers. It's crucial for enterprises seeking to maintain digital visibility in AI tools like ChatGPT, Claude, and Perplexity. GEO focuses on what Large Language Models (LLMs) value: clarity, authority, structure, and relevance.

2. How do LLMs choose what to recommend in AI search?

LLMs prioritize content relevance, authority, structured information, verifiable claims, and unique information gain. They favor structured data over traditional web content and use Retrieval-Augmented Generation (RAG) to access external knowledge. Consistency across sources is crucial for LLM trust.

3. What are the key components of Lureon's GEO framework?

Lureon's GEO framework consists of three main components: entity clarity and semantic alignment for brand recognition, schema markup and structured metadata for NLP parsing, and prompt-based content design for AI tools like ChatGPT and Perplexity.

4. How can enterprises create content that trains LLMs to cite them?

Enterprises should focus on creating long-form content targeting high-intent prompts, using LLM-friendly FAQ formatting for extractive summarization, and employing NLP patterns to reinforce brand authority. Consistency in terminology and messaging across all platforms is crucial.

5. How can companies track and measure their performance in LLM optimization?

Companies can track their LLM optimization performance through real-time citation tracking across different AI platforms, implementing performance dashboards for GEO content, and conducting prompt testing and semantic similarity analysis. This data-driven approach allows for continuous refinement of AI visibility strategies.